A crash course in AI terms (machine learning, diffusion models) in 6 minutes or less

Understanding a few terms helps us understand and use AI better.

AI feels like magic, but it isn’t. Understanding a few terms and definitions can help us understand and use AI more effectively.

For example, let’s look at a description of GPT3:

“GPT3: An AI language model created by OpenAI. GPT3 is a deep learning algorithm that can generate human-like text without being specifically trained for any particular task.”

Even in that simple definition there are loads of terms we might not understand like:

model

deep learning

algorithm

trained

Let’s fix that. Here is a short, simply explained glossary of relevant AI terms and, specifically, how they relate to each other.

What is Artificial Intelligence (AI)?

AI is the simulation of human intelligence in computers.

AI lets computers recognize a situation, and take actions or make predictions that have the best chance of achieving a specific goal.

For example, smart cruise control in a car is AI: a computer is programmed to recognize the traffic situation in order to achieve the goal of not touching other cars.

The computer uses an AI algorithm (defined below) to recognize when traffic is slowing down, recognize if its car is approaching the other cars too fast, and then take an action, like braking, to avoid touching another car.

People use the terms AI and machine learning (defined below) interchangeably because machine learning is where most of the AI breakthroughs are happening, but you can have AI without machine learning.
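To make "AI without machine learning" concrete, here is a minimal sketch of the cruise control example written as plain hand-coded rules. The function name, the speed and gap inputs, and the 30 meter safe gap are all made up for illustration; a real car would use far more careful logic.

```python
def cruise_control_action(own_speed, lead_car_speed, gap_m, safe_gap_m=30.0):
    """Return 'brake', 'hold', or 'accelerate' using hand-written rules."""
    # Positive closing speed means we are catching up to the car ahead.
    closing_speed = own_speed - lead_car_speed
    if gap_m < safe_gap_m and closing_speed > 0:
        return "brake"
    if gap_m > 2 * safe_gap_m and closing_speed <= 0:
        return "accelerate"
    return "hold"


print(cruise_control_action(own_speed=30.0, lead_car_speed=20.0, gap_m=25.0))
print(cruise_control_action(own_speed=25.0, lead_car_speed=25.0, gap_m=40.0))
print(cruise_control_action(own_speed=20.0, lead_car_speed=25.0, gap_m=80.0))
```

Nothing here is learned from data. A human chose every rule and every number, which is exactly what machine learning tries to avoid.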

So that leads us to the next question,

What is an algorithm?

An algorithm is a set of step-by-step instructions on how to achieve a specific task, such as recognizing an object in a picture, or predicting the price of tuna.

Algorithms are the foundation of AI (and computers in general), and can be used to solve problems, identify patterns, and automate tasks.
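As a tiny illustration of "step-by-step instructions", here is a sketch of a very naive algorithm for the tuna example: predict the next price as the average of the last few prices. The function name, the window size, and the prices are invented for this example, and nobody should trade tuna with it.

```python
def predict_next_price(prices, window=3):
    """Predict the next price as the average of the last `window` prices."""
    if not prices:
        raise ValueError("need at least one past price")
    recent = prices[-window:]          # step 1: take the most recent prices
    return sum(recent) / len(recent)   # step 2: average them


tuna_prices = [10.0, 12.0, 11.0, 13.0]  # made-up prices
print(predict_next_price(tuna_prices))  # average of 12.0, 11.0, 13.0 -> 12.0
```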

The most popular techniques and algorithms for creating AI right now are machine learning techniques. Which leads us to,

What is Machine Learning (ML)?

Machine learning, a subset of AI, is the idea that computer programs can automatically learn from and adapt to new data without being directly programmed by humans.

Machine learning algorithms train (defined below) models (defined below) based on examples of labeled data called training data (defined below).

We give the computer a ton of data and a way to test how well it is doing, and let it change lots of settings to get better at what we want it to do.

What is a machine learning model?

A machine learning model is a set of data and procedures that can be used to get a result from input data.

For example, an auto cruise control model is given data from car sensors and does math on it to predict how fast the user’s car should be going.

The model is a representation of the process that input data is put through to get an output.

For example, a donut shop model would take raw ingredients as input and output donuts. The model would include the steps that need to be performed on the inputs (mix with water, knead, fry, frost) as well as parameters (defined below) for each step (fry for 3 minutes at 400 degrees F).

The key difference between machine learning and other forms of AI is that the machine learning model is not coded by humans. Instead, humans create the process and give the process training data (defined below), and the model is trained (defined below) so that it can complete the task it was designed for.
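The donut shop idea can be sketched in code as a fixed set of steps plus a few tunable numbers. This is only a toy: the class name, the field names, and the string "donut" it produces are invented here to show the split between steps and parameters.

```python
from dataclasses import dataclass


@dataclass
class DonutModel:
    # Parameters: the numbers that tune a step (values from the example above).
    fry_minutes: float = 3.0
    fry_temp_f: float = 400.0

    def run(self, ingredients):
        """Apply the fixed steps, in order, to the input and return the output."""
        dough = f"kneaded({'+'.join(ingredients)}+water)"
        fried = f"fried({dough}, {self.fry_minutes} min @ {self.fry_temp_f} F)"
        return f"frosted({fried})"


model = DonutModel()
print(model.run(["flour", "sugar", "yeast"]))
```

The steps never change, but changing `fry_minutes` or `fry_temp_f` changes the donut. In machine learning, those numbers are what training adjusts.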

When someone releases a model like Stable Diffusion or GPT3, the model is the “thing” that is created after running machine learning algorithms on training data. The model represents the rules, numbers, and structures required to take inputs, and create outputs.

What is training data?

Training data is a bunch of pairs of items. Each training data item has two parts: 1.) the input, and 2.) the expected output.

For example, a set of image training data would be lots of pictures, each with a label saying what it is.

If we wanted to create a model that could identify which images were of cats, we would get a set of training data that includes pictures of cats and not cats, then train (defined below) the model on our training data.
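In code, training data for the cat example could be as simple as a list of (input, expected output) pairs. The file names below are hypothetical placeholders, not real files.

```python
# Each item pairs an input with its expected output (the label).
# The file names are hypothetical placeholders.
training_data = [
    ("photos/img_001.jpg", "cat"),
    ("photos/img_002.jpg", "not cat"),
    ("photos/img_003.jpg", "cat"),
    ("photos/img_004.jpg", "not cat"),
]

inputs = [image for image, label in training_data]
expected_outputs = [label for image, label in training_data]

print(len(training_data), "examples,", expected_outputs.count("cat"), "of them cats")
```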

What is training in machine learning?

Training is a process of feeding labeled data to an algorithm, getting the result, and then telling the model how closely its output matched the expected or desired output.

This is done again and again, with the computer each time doing math to figure out how to adjust internal settings or numbers. These settings or internal numbers are called weights or parameters (defined below).

The computer adjusts parameters to figure out how to get as close as possible to a goal.

Let’s say our goal is to correctly identify 99% of cat photos.

We set up our cat-detecting model, then run all our training data through the model. It predicts which images are cats and which are not. We use a math function to tell the model how it did. A simple example is the number of correctly identified cats divided by the total number of cat photos. Let’s say it correctly identified 50% of the cats.

The model then runs a bunch of math to figure out how to adjust the parameters to improve on that result, and then trains again, running all the training data through the model, trying to identify cats and then using the same math function to figure out how well it did. Let’s say it now identifies 60% of the cats correctly. It has improved! And most importantly, no human was directly programming it to get better. The machine made some changes and improved without direct human programming.

The machine is learning to adjust the parameters of its model to get better and better at identifying cats.

When the model hits 99% accuracy, the model is trained. That means it contains a bunch of parameters plugged into a bunch of algorithms: we put in our input data (a picture), and the output is whether that picture is a cat, which it gets correct 99% of the time.
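Here is a minimal sketch of that loop, under some big simplifying assumptions. Each photo is boiled down to one made-up "cat-likeness" number, the model has just two parameters, the score is the fraction of all photos classified correctly (rather than cats only), and the update rule is the classic perceptron rule, which is one simple way to adjust parameters and not necessarily what any real image model uses.

```python
# Toy training data: (feature, label). The single feature is a hypothetical
# "cat-likeness" score between 0 and 1; label 1 means cat, 0 means not cat.
training_data = [(0.9, 1), (0.8, 1), (0.7, 1), (0.3, 0), (0.2, 0), (0.1, 0)]


def predict(weight, bias, feature):
    return 1 if weight * feature + bias > 0 else 0


def accuracy(weight, bias, data):
    correct = sum(predict(weight, bias, x) == label for x, label in data)
    return correct / len(data)


def train(data, goal=0.99, learning_rate=0.1, max_rounds=20):
    weight, bias = 0.0, 0.0                      # the parameters start untrained
    history = [accuracy(weight, bias, data)]
    for _ in range(max_rounds):
        if history[-1] >= goal:                  # stop once the goal is reached
            break
        for x, label in data:
            error = label - predict(weight, bias, x)   # 0 when the guess was right
            weight += learning_rate * error * x        # nudge the parameters
            bias += learning_rate * error
        history.append(accuracy(weight, bias, data))
    return weight, bias, history


weight, bias, history = train(training_data)
for round_number, score in enumerate(history):
    print(f"round {round_number}: {score:.0%} correct")
```

The untrained model starts at 50% on this toy data and ends with every example classified correctly. The only things that changed along the way are the two numbers `weight` and `bias`.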

What is a parameter?

Parameters, or weights and biases, are the internal numbers in a machine learning model or neural network (defined below) that are changed to alter how the model behaves.

Imagine a model is a radio. Parameters are like the knobs on the radio, which are tuned to achieve a specific behavior, like tuning in to a specific frequency. Parameters are not set by the creator of the model; rather, their values are determined, or learned, automatically by the training process.

What is a neural network?

There are tons of different types of machine learning models. Neural networks are one of the more popular ones.

The name and structure of neural networks are inspired by the human brain, mimicking the way that biological neurons signal to one another.

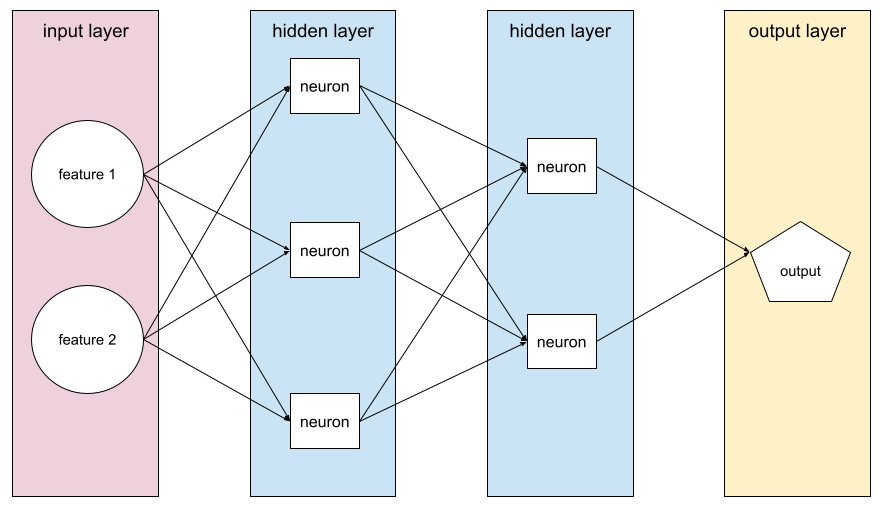

Neural networks are made up of layers: an input layer, one or more hidden middle layers, and an output layer. In a simple network, each node, or artificial neuron, connects to all the neurons of the next layer. Each connection has an associated weight, and each neuron has a threshold, or bias; these are the parameters.

The neurons are where we store the parameters that the training goes through and changes to get better at a task.

If the output of any individual neuron is above a set value, that neuron is activated, sending data to the next layer of the network. Otherwise, no data is passed along to the next layer of the network.

The neurons in the next layer calculate the weighted sum of their input values, then pass that number as an input to a math function called an activation function. The activation function runs, and if the number is above a set value, the neuron passes the data on again.

Adding layers of neurons adds more parameters to the model, which allows the model to fit more complex functions and do more interesting things.
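The weighted-sum-then-activation idea can be sketched in a few lines. One swap to be aware of: instead of the hard on/off threshold described above, this sketch uses a sigmoid activation, a common smooth alternative that squashes any number into the range 0 to 1. All weights and biases below are made-up numbers, not trained values.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def layer_forward(inputs, weights, biases):
    """One fully connected layer: weighted sum plus bias, then activation."""
    outputs = []
    for neuron_weights, bias in zip(weights, biases):
        weighted_sum = sum(w * x for w, x in zip(neuron_weights, inputs)) + bias
        outputs.append(sigmoid(weighted_sum))
    return outputs


def network_forward(inputs, layers):
    # The output of each layer becomes the input of the next one.
    for weights, biases in layers:
        inputs = layer_forward(inputs, weights, biases)
    return inputs


# Made-up parameters: 2 inputs -> 3 hidden neurons -> 1 output neuron.
layers = [
    ([[0.5, -0.2], [0.1, 0.4], [-0.3, 0.8]], [0.0, 0.1, -0.1]),
    ([[0.7, -0.5, 0.2]], [0.05]),
]
output = network_forward([1.0, 2.0], layers)
parameter_count = sum(len(w) * len(w[0]) + len(b) for w, b in layers)
print(output, parameter_count)
```

Even this tiny network has 13 parameters (9 in the hidden layer, 4 in the output layer). Adding layers or neurons grows that count quickly.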

There is a wonderful video linked at the bottom of this article that explains neural networks visually.

What is deep learning?

Deep learning is machine learning done using “deep” neural networks, or neural networks that have multiple (more than 2) layers. You cannot do deep learning without neural networks.

Deep learning models are trained using large amounts of data in order to find patterns and make predictions, and they are a key technology behind recent advances in AI.

What is a generative model?

A generative model is a model that is used to generate new data based on existing training data. It is often used to generate new images or videos from a set of training data.
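To get a feel for "generate new data based on existing training data", here is a sketch of about the simplest generative model there is: it records which word follows which in some training text, then strings words together by picking from those recorded followers. This is a toy word-pair (bigram) model chosen for illustration; it is not how GPT3 or Stable Diffusion work internally, and the training sentence is made up.

```python
import random
from collections import defaultdict


def train_bigram_model(text):
    """Record which words follow each word in the training text."""
    words = text.split()
    followers = defaultdict(list)
    for current_word, next_word in zip(words, words[1:]):
        followers[current_word].append(next_word)
    return followers


def generate(model, start_word, length, seed=0):
    rng = random.Random(seed)        # fixed seed so runs are repeatable
    words = [start_word]
    for _ in range(length - 1):
        options = model.get(words[-1])
        if not options:              # dead end: no word ever followed this one
            break
        words.append(rng.choice(options))
    return " ".join(words)


training_text = "the cat sat on the mat and the dog sat on the rug"
model = train_bigram_model(training_text)
print(generate(model, "the", 8))
```

The output can be a sentence that never appears in the training text, yet every pair of neighboring words in it was seen during training.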

There are many types of generative models, including Generative Adversarial Networks (GANs), Variational Autoencoders (VAEs), flow-based models, and diffusion models.

Diffusion models (defined below) have become very popular lately.

What is a Diffusion Model?

Diffusion Models are a subset of generative models.

Diffusion Models work by destroying training data by adding Gaussian noise, and then learning to recover the training data by reversing the noising process.

After training, we can use the Diffusion Model to generate new data by passing random noise and a new description of what we want through the model.
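The "destroying" half of that process is easy to sketch. The code below assumes the commonly used noising step, where each step shrinks the data slightly and mixes in a little Gaussian noise; the noise amount `beta`, the step count, and the eight-number "image" are made-up values. The hard part, training a neural network to reverse the noise, is left out entirely.

```python
import math
import random


def add_noise_step(data, beta, rng):
    """One forward step: shrink the data slightly and mix in Gaussian noise."""
    keep = math.sqrt(1.0 - beta)
    mix = math.sqrt(beta)
    return [keep * x + mix * rng.gauss(0.0, 1.0) for x in data]


def forward_process(data, steps, beta, seed=0):
    rng = random.Random(seed)        # fixed seed so runs are repeatable
    history = [list(data)]
    for _ in range(steps):
        history.append(add_noise_step(history[-1], beta, rng))
    return history


pixels = [1.0, 1.0, 1.0, 1.0, -1.0, -1.0, -1.0, -1.0]  # stand-in for an image
history = forward_process(pixels, steps=50, beta=0.1)
for step in (0, 10, 50):
    print(step, [round(x, 2) for x in history[step]])
```

At step 0 the pattern is obvious, and as the steps go on it fades into random-looking numbers. A diffusion model is trained to walk that path in the other direction.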

Many popular text-to-image models, such as Stable Diffusion, are diffusion models.

What is GPT3?

Let’s see how much we have learned. Going back to the original description of GPT3:

“GPT3 (Generative Pre-trained Transformer 3) is an AI language model created by OpenAI. GPT3 is a deep learning algorithm that can generate human-like text without being specifically trained for any particular task.”

Breaking that down:

GPT3 is AI, so it mimics human actions to achieve specific goals.

GPT3 is a generative model, so it creates new text from text it was trained on.

GPT3 uses a deep learning model, so it uses a many-layered neural network.

GPT3 can create text for many situations, even ones it wasn’t specifically trained for. That means it can create text for a specific goal without first seeing lots of labeled training data for that task and tweaking its own parameters to get good at it.

Now we can understand why GPT3 is so cool. Instead of being just one model trained for one task, like detecting if a chunk of text is spam, GPT3 is one model trained for a huge number of tasks, meaning it can create text for many different situations without having to be retrained.

Researchers don’t have to train one model to write blog posts and then a separate model to write poems; the GPT3 model can do both.

Let’s test our newfound knowledge again by answering the last question,

What is Stable Diffusion?

The description of Stable Diffusion from Wikipedia is:

‘Stable Diffusion is a latent diffusion model, a variety of deep generative neural network’

We know most of those terms now.

Stable Diffusion is AI, meaning it mimics human intelligence and completes tasks. For Stable Diffusion, the task is ‘take this text prompt, and create a good looking image’.

Stable Diffusion uses the AI technique of machine learning, meaning we don’t program it explicitly; we give a computer a lot of training data and let it program itself.

Stable Diffusion uses a deep (more than 2 layer) neural network, meaning we have a bunch of connected nodes, arranged in more than 2 layers, that do math on the input and then produce an output that is used in the next layer of nodes.

Stable Diffusion is a generative model meaning it is a set of data and procedures that can be used to get new output from input. The model has a system of algorithms and data to take our text prompt and run it through a process to create a new (generated) image.

Stable Diffusion is a diffusion model, meaning it adds and removes noise to create images.

Not so bad, right?

If you want to dig more deeply into neural networks, I would highly recommend watching this awesome video series from 3Blue1Brown.

Three Links

3 interesting links a week

Paper - 3D Magic Pony: Image to 3D - Give the AI an image and it can create a 3D model of the animal. This is pretty cool and shows that image to 3D is coming sooner than most think.

Newsletter - The Prompt, an AI newsletter by Anita Kirkovska - AI is moving so fast that Anita sends a newsletter a couple of times a week with interesting links and learnings.

Article - Stable Diffusion 2 is not what users were expecting - Some users are finding SD2 worse than 1.5. This article breaks down why this is happening. Hint: they use a different CLIP system.