What is Dreambooth and how do you use it?

When the model knows who you are, you can put yourself in art, and things get interesting.


Stable Diffusion is a pretty incredible open-source text-to-image model.

Enter text like ‘cat in intricate armor’ and it can make amazing images.

Because it is open source, it has led to an explosion of art and tools and experiments over the last couple of months.


But can you do it again?

One big issue with text-to-image models is repeatability. In other words, controlling a subject's appearance and identity using text alone is very hard. If you want multiple images of different scenes with the same subject, it can be very difficult.

Pictures speak louder than words, so here is an example.

You are creating images for a story about a knight who fights, then befriends, a dragon.

We want three images: a knight, a knight fighting a dragon, and a knight riding a dragon.

Let’s call the knight Sir Dagger.

It is easy to get cool images of knights using Stable Diffusion.

First, head to Lexica (a searchable gallery of Stable Diffusion images and their prompts) and search for "knight". Scroll until you find an image style you like and copy its prompt. Then run that prompt in Stable Diffusion until you get an image you like.

The prompt I used was:

‘highly detailed vfx portrait of a brave and noble knight, stephen bliss, unreal engine, greg rutkowski, global illumination, detailed and intricate environment’

Sir Dagger is ready!

Then in our story the knight fights a dragon!

So you generate an image of the knight fighting a dragon. The prompt is:

‘highly detailed vfx portrait of a brave and noble knight fighting a dragon, stephen bliss, unreal engine, greg rutkowski, global illumination, detailed and intricate environment’

And here is the result.

Sir Dagger looks… a little different. No helmet, wild hair, and different armor.

This is because the knight is not the same. Stable Diffusion doesn't know how we want Sir Dagger to look; it just creates an image of what it thinks a knight looks like, and that knight could be any variation of a knight.

We continue, and in our story, the knight and dragon are friends. Prompt: ‘highly detailed vfx portrait of a brave and noble knight riding a dragon, stephen bliss, unreal engine, greg rutkowski, global illumination, detailed and intricate environment’

Result:

Sir Dagger is now gold and, worse, has merged with the dragon. Not what we wanted.

Three prompts for a knight, three completely different knights. The problem is worse with subjects that lack a recognizable major trait like armor.

And the problem becomes nearly impossible if we want to make art with a specific likeness.
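All three knight prompts above share the same style modifiers and differ only in the subject phrase. A minimal Python sketch of that template pattern makes the limitation concrete; the helper name `build_prompt` and the `STYLE` constant are purely illustrative. Holding the style text fixed does nothing to pin down *which* knight the model draws, because nothing in the text identifies Sir Dagger specifically.

```python
# Style modifiers shared by all three knight prompts in this article.
STYLE = (
    "stephen bliss, unreal engine, greg rutkowski, "
    "global illumination, detailed and intricate environment"
)


def build_prompt(subject: str) -> str:
    """Hypothetical helper: wrap a subject phrase in the shared prompt template."""
    return f"highly detailed vfx portrait of {subject}, {STYLE}"


# The three scenes from the Sir Dagger story; only the subject changes.
subjects = [
    "a brave and noble knight",
    "a brave and noble knight fighting a dragon",
    "a brave and noble knight riding a dragon",
]

prompts = [build_prompt(s) for s in subjects]
for p in prompts:
    print(p)
```

The first generated string is exactly the Sir Dagger prompt used above. The text stays consistent, but the knight does not, which is the gap Dreambooth is meant to close.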

How can we create art with a specific likeness?

But what if we want to make art and images with the likeness of someone we actually know?

What if you wanted to tell a story about yourself? Or a friend, who just happens to look a lot like Matt Damon?

How would you describe Matt Damon's (I mean, your friend's) face to Stable Diffusion?

Let’s try.

Prompt: ‘handsome male, high forehead, strong jaw, strong nose, short brown hair holding a sword and a microscope, highly detailed, digital painting, artstation, concept art, smooth, sharp, focus, illustration. art by artgerm and greg rutkowski and alphonse mucha’

Results:

Yes, they have the vague handsome-movie-star look, but you wouldn't mistake any of these for Matt Damon. If we could train the AI on Matt Damon, it would be able to make images that look more like him.

And we can test this, because Stable Diffusion does recognize Matt Damon. So let's try that.

Prompt: ‘handsome Matt Damon, holding a sword and a microscope, highly detailed, digital painting, artstation, concept art, smooth, sharp, focus, illustration. art by artgerm and greg rutkowski and alphonse mucha’

As you can see, the AI knows how to describe Matt Damon better than I do. Or, more accurately, the AI was trained on images that include Matt Damon, so it can reproduce him better than my text prompts can.

Enter… Dreambooth

Dreambooth lets you take your own images and train the AI to recognize and reproduce a specific subject (a person, a pet, an object, etc.).
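In the Dreambooth paper, the subject is bound to a rare identifier token paired with a class noun (the paper's example is "a [V] dog"), and a class-only prompt ("a dog") is used for a prior-preservation loss so the model doesn't forget what the general class looks like. The sketch below only shows how those prompts are shaped; it does not train anything. The identifier `sks` (a rare token often used in community implementations), the class noun `man`, and the helper names are all assumptions for illustration.

```python
from dataclasses import dataclass


@dataclass
class DreamboothPrompts:
    """Hypothetical container for the prompts used when training one subject."""
    instance: str     # paired with your ~20 photos of the subject
    class_prior: str  # paired with generic class images (prior preservation)


def make_prompts(identifier: str, class_noun: str) -> DreamboothPrompts:
    # Bind the rare identifier to the subject by pairing it with its class noun;
    # the class-only prompt keeps the model's general idea of the class intact.
    return DreamboothPrompts(
        instance=f"a photo of {identifier} {class_noun}",
        class_prior=f"a photo of a {class_noun}",
    )


# Assumption: "sks" as the rare identifier for your friend, "man" as the class.
prompts = make_prompts("sks", "man")
print(prompts.instance)     # a photo of sks man
print(prompts.class_prior)  # a photo of a man

# After training, the identifier goes into ordinary prompts to recall the subject.
scene = f"handsome {'sks'} {'man'}, holding a sword and a microscope, highly detailed, digital painting"
print(scene)
```

The design point is that the identifier carries the likeness while the rest of the prompt (scene, style) works exactly as before.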

Dreambooth was created by Google researchers. Here are their project page, their official paper, and a Twitter thread where they introduced the research.

Since Stable Diffusion is open source, people took the Dreambooth paper and implemented it. And here is where the open source train starts rolling and gets interesting.

I believe this GitHub repo, https://github.com/XavierXiao/Dreambooth-Stable-Diffusion, was the first implementation of Dreambooth on Stable Diffusion.

Joe Penna, a director and YouTuber, found that repo and made his own tweaks so it trains better on faces:

https://github.com/JoePenna/Dreambooth-Stable-Diffusion

Joe Penna is friends with Niko Pueringer. Niko runs a large YouTube channel and they used Joe’s implementation to create this fun video which you should definitely watch.

The video blew up and tons of people started trying to use Dreambooth. But as of September 2022, using Dreambooth was difficult. Joe’s version is a fork of a fork, and requires a super large GPU and some amount of Python scripting knowledge.

People started to get it working, but it was not easy.

Dreambooth Update Feb 2023

Dreambooth is now MUCH easier to run on your own computer.

This video lays it out. You still need a large GPU, but it can be done if you just follow the step-by-step guide.

Running Dreambooth yourself is much easier now, but you may not need to: tons of Dreambooth services have sprung up. Enter the…

Dreambooth Services

One of the first companies to make Dreambooth easier to use is Astria. You pay $3 to train a model on about 20 of your images, and you get back generated images plus the checkpoint file, so you can run the model on your own: https://www.strmr.com/

People started to get great results with it.

Then two indie hackers, Pieter Levels and Danny Postma, both found Astria, noticed it had an API, and started experimenting with generating different versions and art styles of your avatar.

Levels released his first.

He sold about 170 packs at $30 each in about a day, for roughly $5k in revenue.
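As a quick sanity check, the revenue figure follows directly from the two numbers above (gross, before any GPU or API costs, which the figure doesn't break out):

```latex
170 \text{ packs} \times \$30/\text{pack} = \$5{,}100 \approx \$5\text{k}
```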

Danny released his site a day later. You can train your own Dreambooth model for $15.

Check it out at profilepicture.ai.

The future is easy to use Dreambooth Apps

Right now Dreambooth and Stable Diffusion are hard to use. Command line, GitHub, Hugging Face models, you name it. Nothing the average person could use. I consider myself a decent programmer, and I can't get anything to work.

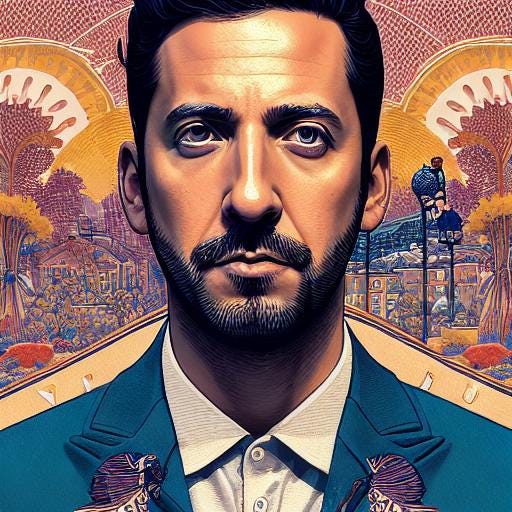

I actually paid for both Astria and AvatarAI, but the APIs and GPUs were overloaded, and nothing worked for almost the entire time I was writing this article. I'm only now starting to get a few images back. Some are fun. Some don't really look like me, but a few are interesting.

But coders and startups are making Dreambooth easier to use and access, and I predict that in a few months there are going to be hundreds of these types of apps.

I call them AI Avatar vending machines.

You will put in about 20 pictures (or fewer) and some amount of money (because GPUs are not cheap), and you get out art with your trained likeness.

Dreambooth Apps Update Feb 2023

I have never been more right in a prediction.

The Lensa app went viral. For about $3-4 you could train a Dreambooth model and get back a bunch of AI images.

Lensa made $25 million.

Then TikTok started building AI filters into its app. TikTok isn't using Dreambooth, but the effect is similar.

Now there are legitimately hundreds of other Dreambooth apps where you can pay and get images in various styles.

The AI Avatar vending machine trend is in full swing.

Ethical Considerations

We are in uncharted territory. Someone could take 20 pictures of anyone and start making images of them.

Artists' styles are being used (even my prompts above name artists), and the artists are not being compensated.

Others have written about the ethics of this type of technology better than I can. You should read their work and be aware that we are all still figuring this out.

Links

Dreambooth Paper - https://arxiv.org/abs/2208.12242

Dreambooth project page - https://dreambooth.github.io/

Stable Diffusion Dreambooth - https://github.com/XavierXiao/Dreambooth-Stable-Diffusion

Joe’s version of Stable Diffusion Dreambooth - https://github.com/JoePenna/Dreambooth-Stable-Diffusion

Astria training service $3 - https://www.strmr.com/

Levels' service $30 - http://avatarai.me/

Danny's service $15 - http://profilepicture.ai/
