How to generate consistent characters in Midjourney (aka how to fix the biggest problem with generative AI art)

Character consistency and replicability are 🔑

Hey! This is Josh from Mythical AI with this week’s deep dive into the biggest issue artists face with generative AI art: character consistency.

AI art is amazing. You can enter a prompt and get back stunning images. But if you want to actually use those images, most use cases require being able to create the same character again and again.

Comics, storyboards, children’s books. All of these require consistent characters.

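Due to the nature of diffusion models (explained here), consistency is tough. Each generation starts from random noise, so the same prompt produces a different result every time.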

If I were creating a webcomic about a ‘scientist who accidentally discovered teleportation’ and ran ‘female scientist’ as a prompt a few times, I would get a completely different character each time.

Luckily, all hope is not lost: there are a few different ways to solve this.

The solutions range from ‘easy’ to ‘hard’, and because the Big 3 image generators (Midjourney, Stable Diffusion, Dalle2) work differently, each system has its own techniques.

For Dalle, the methods to create consistent characters are more limited, and include:

More prompt details

Use ‘generate variations’

Outpaint with ‘twin’ prompts

Use standard characters

For Midjourney, we have similar methods to create consistent characters, including:

More prompt details

Run variations to get closer to the character

Image prompt (very powerful)

‘Find’ your character in the crowds

Finally, Stable Diffusion has the most extensive set of methods to create consistent characters, including:

More prompt details

Run variations to get closer to the character

‘Find’ your character in the crowds

Textual Inversion

Dreambooth

Model fine-tuning

Let’s dive in!

How to create consistent characters in Dalle2

Dalle2 from OpenAI is closed source, so we don’t know exactly how it generates characters.

The short truth is that generating consistent characters in Dalle is VERY difficult, and the results are just ok. Midjourney and Stable Diffusion give more consistent characters.

That being said, there are 4 ways to create more consistent characters in Dalle2:

More prompt details

Use ‘generate variations’ to get closer to your character

Outpaint with ‘twin’ prompts

Use standard characters

1 - More prompt details (difficulty: easy, results: ok)

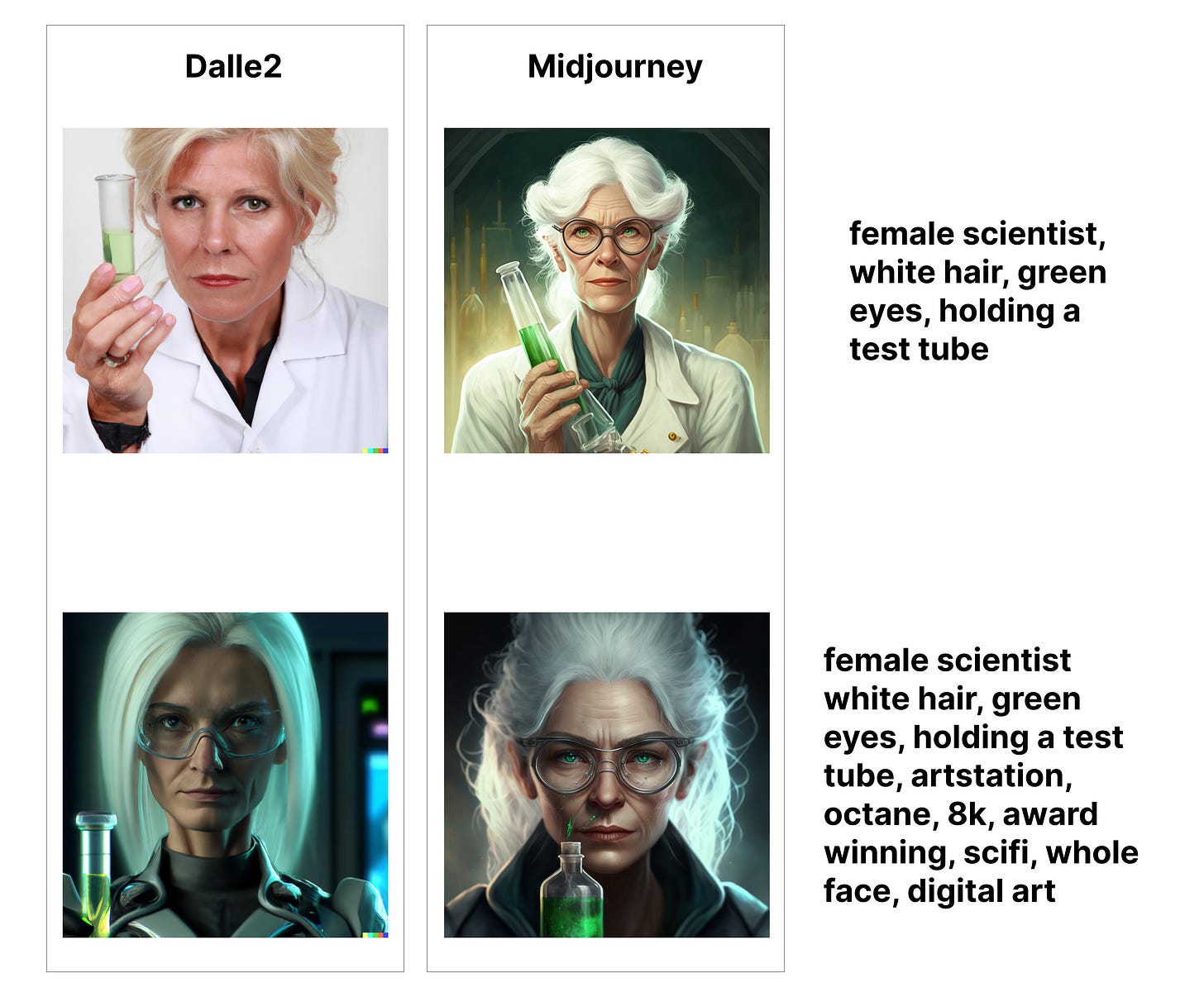

By adding more details to the prompt, you can get more consistent characters.

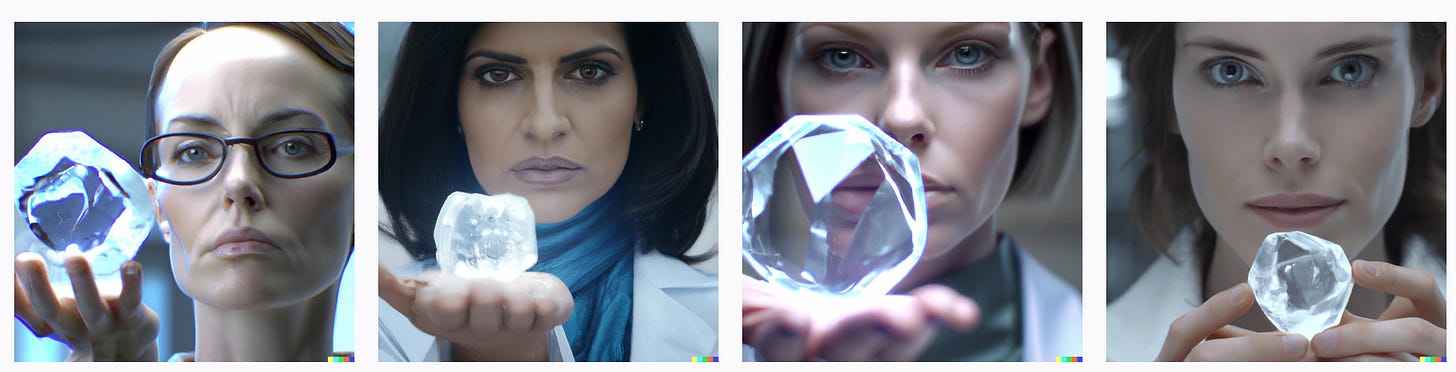

If we use a generic prompt like ‘female scientist holding a floating crystal of power, unreal engine, octane, 8k, award winning, scifi, whole face’, we get a wide variety of characters.

By adding more detail to our prompts, we can narrow the variation. This prompt: ‘female scientist smiling, blond hair, straight nose, small ears, holding a microscope, unreal engine, octane, 8k, award winning, scifi’ creates a smaller range of characters.

As you can see, the results are not perfect, but the characters are more consistent.

Another trick is to open one of the results and generate variations from it. Each variation is a slightly different character, so this can sometimes get you a character that is closer to your original or desired image.

A common question is ‘How do I add prompt instructions to a variation in Dalle?’ The sad answer is, you can’t. You can’t ask Dalle to add an article of clothing or a different piece of lab equipment. You can take that image and run it through the new InstructPix2Pix model (a detailed explanation of how to use it is coming in a different episode), but Dalle doesn’t support prompts on variations.
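One way to keep the extra details identical from prompt to prompt is to store them once and only swap the scenario. The sketch below assumes a hypothetical `Character` container and `build_prompt` helper (neither is a Dalle feature); it simply reproduces the detailed prompt above as plain text you can paste in.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Character:
    """Hypothetical container for the details that must stay identical in every prompt."""
    base: str                    # who the character is, e.g. "female scientist smiling"
    traits: tuple[str, ...]      # fixed physical details that narrow the range of faces
    style: tuple[str, ...] = ()  # rendering keywords shared by every scene


def build_prompt(character: Character, scenario: str) -> str:
    """Reuse the same character text verbatim and swap in only the scenario."""
    parts = [character.base, *character.traits, scenario, *character.style]
    # Drop empty pieces so stray separators don't end up in the prompt.
    return ", ".join(p.strip() for p in parts if p.strip())


scientist = Character(
    base="female scientist smiling",
    traits=("blond hair", "straight nose", "small ears"),
    style=("unreal engine", "octane", "8k", "award winning", "scifi"),
)
print(build_prompt(scientist, "holding a microscope"))
```

Changing only the `scenario` argument keeps the character description word-for-word the same across scenes, which is the whole point of the technique.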

2 - Use ‘generate variations’

Dalle lets you generate variations of an image. So if you find a character you like, you can generate variations of it. This somewhat narrows the range of characters produced. Do this enough times and you can find images that look like your original character.

The biggest issue with this is that it is hard to put that character into new situations. You don’t need 10 images of one character in the same scenario; you need 10 images of one character in different scenarios. Variations can help you ‘find’ your character again in a new scenario.

One issue with Dalle variations is that they seem to lose detail and quality with each successive round of variations.
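The ‘keep generating variations until it looks like my character’ loop is essentially a greedy search. The sketch below is a hypothetical illustration, not a Dalle API: `make_variations` stands in for clicking ‘generate variations’ (or any API that returns variations), and `similarity` stands in for your own judgement of how close an image is to your character. The small `max_rounds` default reflects the quality loss over repeated generations.

```python
from typing import Callable, List, TypeVar

T = TypeVar("T")  # stands in for whatever an "image" is in your workflow


def refine_with_variations(
    start: T,
    make_variations: Callable[[T], List[T]],
    similarity: Callable[[T], float],
    max_rounds: int = 3,
) -> T:
    """Greedy search over repeated rounds of variations.

    make_variations and similarity are hypothetical hooks: the first returns
    variations of an image, the second scores closeness to the character you
    want (higher is better).
    """
    best, best_score = start, similarity(start)
    # Keep the number of rounds small, since quality may drop with each generation.
    for _ in range(max_rounds):
        candidates = make_variations(best)
        if not candidates:
            break
        top = max(candidates, key=similarity)
        top_score = similarity(top)
        if top_score <= best_score:
            break  # no variation got closer, so stop here
        best, best_score = top, top_score
    return best


# Toy usage: "images" are integers and the target character is 5.
closest = refine_with_variations(
    0,
    make_variations=lambda x: [x - 1, x + 1, x + 2],
    similarity=lambda x: -abs(x - 5),
)
print(closest)
```

Stopping as soon as no variation improves on the current pick avoids burning generations (and quality) once you have found your character.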

3 - Outpaint with ‘twin’ prompts

Where you can add prompts in Dalle is in outpainting. Dalle still has the best native outpainting capabilities of the Big 3 image generators.

How to outpaint in Dalle

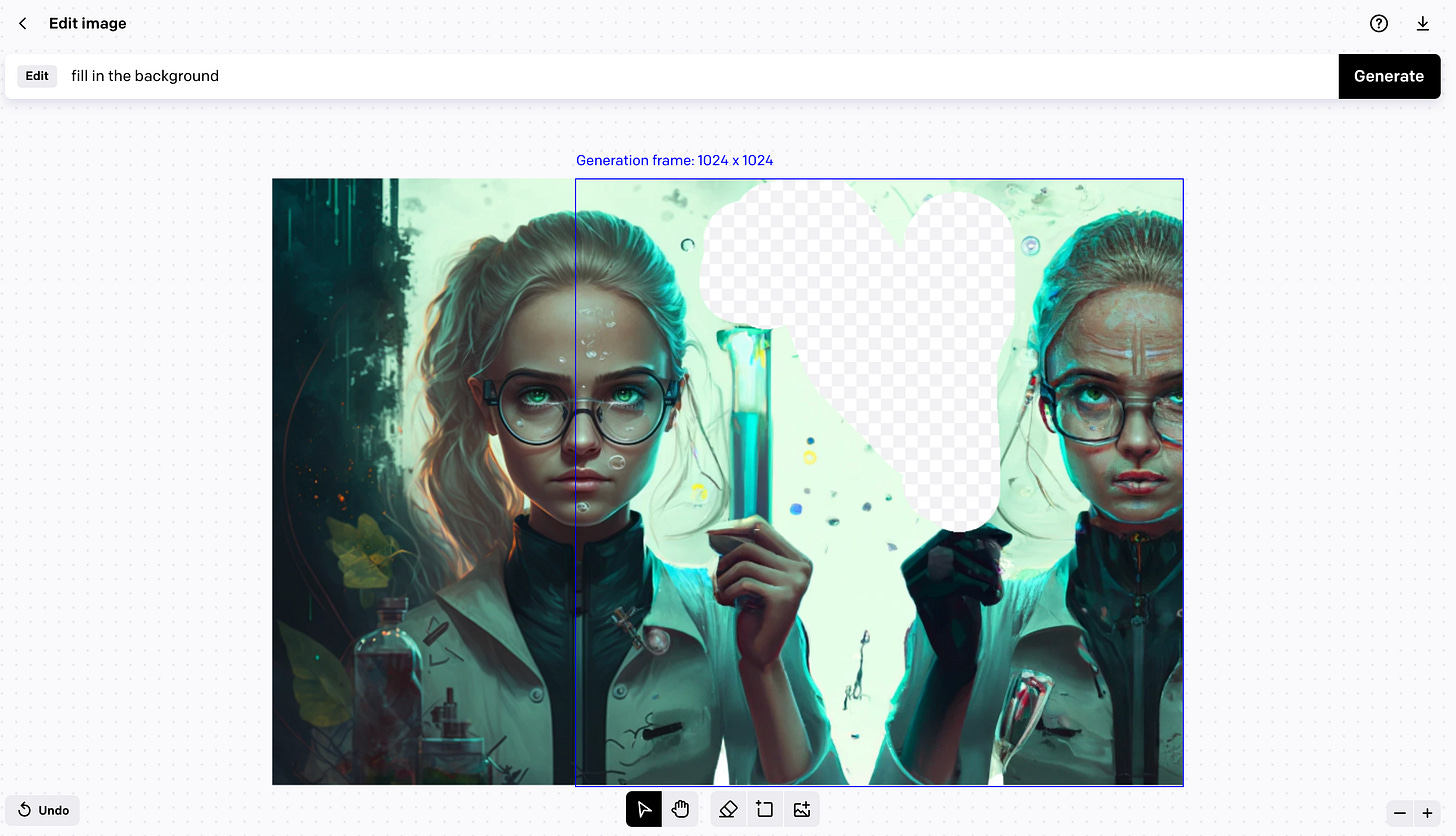

Dalle allows you to upload or generate an image, then start editing it.

You can put a box around the area you want your prompt to affect and then use a brush to erase sections of the image. Any blank area inside the box will be filled in with the results of your text prompt.
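The fill rule above (inside the box AND blank) can be modelled in a few lines. This is a hypothetical sketch, not Dalle’s implementation: `alpha` is an assumed per-pixel opacity grid where 0 means blank or erased. OpenAI’s image edit API uses a similar idea, where the fully transparent areas of the mask mark the region to regenerate.

```python
from typing import List, Tuple

Box = Tuple[int, int, int, int]  # (left, top, right, bottom); right and bottom are exclusive


def fill_region(alpha: List[List[int]], frame: Box) -> List[List[bool]]:
    """Mark the pixels outpainting would generate: inside the frame AND blank.

    alpha is a hypothetical per-pixel opacity grid for the canvas
    (0 = blank/erased, anything else = existing image).
    """
    left, top, right, bottom = frame
    return [
        [left <= x < right and top <= y < bottom and a == 0 for x, a in enumerate(row)]
        for y, row in enumerate(alpha)
    ]


# A 4x2 canvas: the left half is the existing image, the right half is blank.
canvas = [
    [255, 255, 0, 0],
    [255, 255, 0, 0],
]
for row in fill_region(canvas, frame=(1, 0, 3, 2)):
    print(row)
```

Blank pixels outside the box stay blank, and existing pixels inside the box are kept, which is why the box placement and the erased areas both matter.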

Twin prompts can give consistent characters

A clever user on Reddit discovered how to use outpainting on Dalle to create consistent characters in this thread.

By giving prompts like ‘twin standing next to’ or ‘brother’ or ‘sister’, you can nudge Dalle into creating similar-looking characters.

It takes a lot of trial and error, but it can work ok.

Unfortunately, that is the theme with all the Dalle techniques for consistent characters: they all just work… ok.

The last method to get consistent characters is:

4 - Use standard characters in Dalle
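Because twin prompts take a lot of trial and error, it can help to write out the combinations before you start outpainting and work through them one at a time. The `twin_prompts` helper and its phrasing template below are hypothetical, not the Reddit user’s exact wording.

```python
from itertools import product
from typing import List


def twin_prompts(character: str, relations: List[str], placements: List[str]) -> List[str]:
    """Every relation/placement combination, to try one by one while outpainting."""
    return [
        f"{relation} of the {character}, {placement}"
        for relation, placement in product(relations, placements)
    ]


for prompt in twin_prompts(
    "female scientist",
    relations=["twin", "sister"],
    placements=["standing next to her", "in the same lab coat"],
):
    print(prompt)
```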

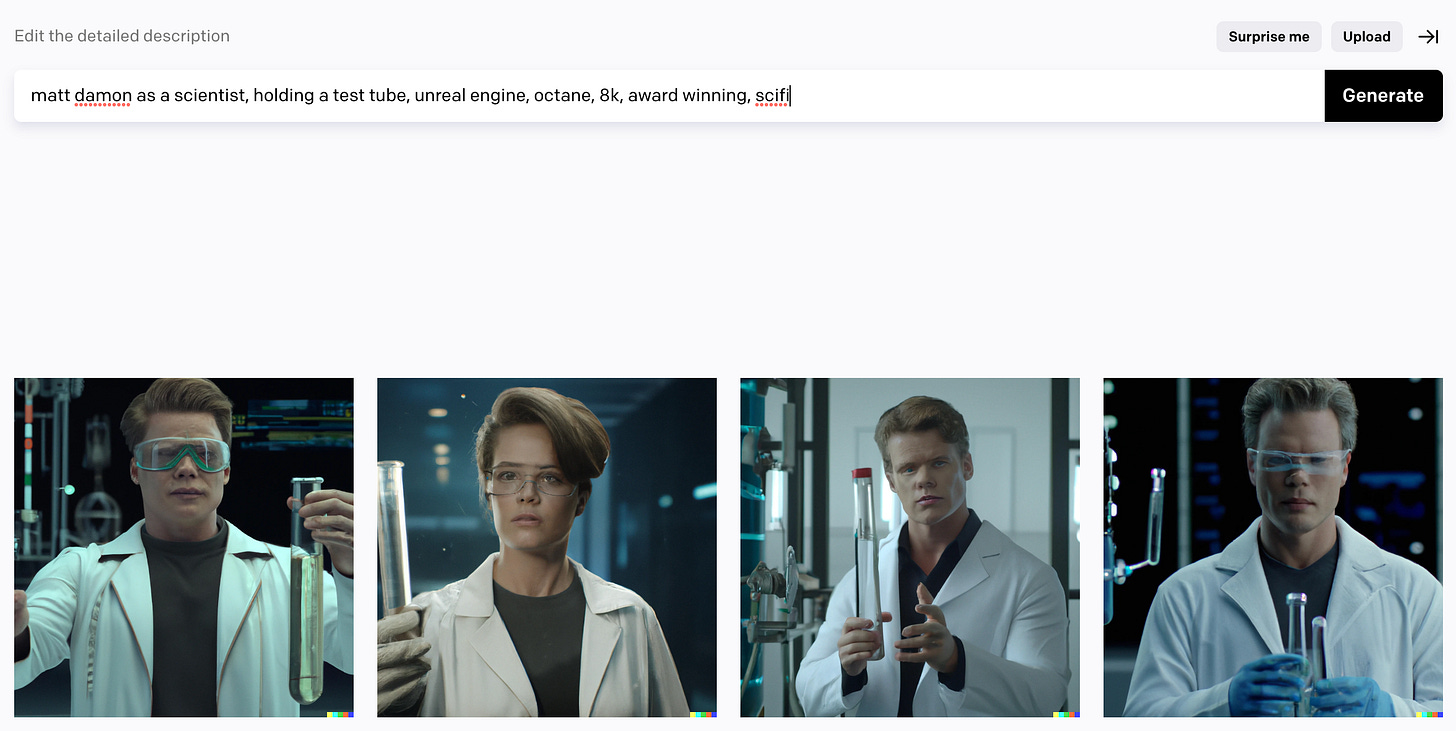

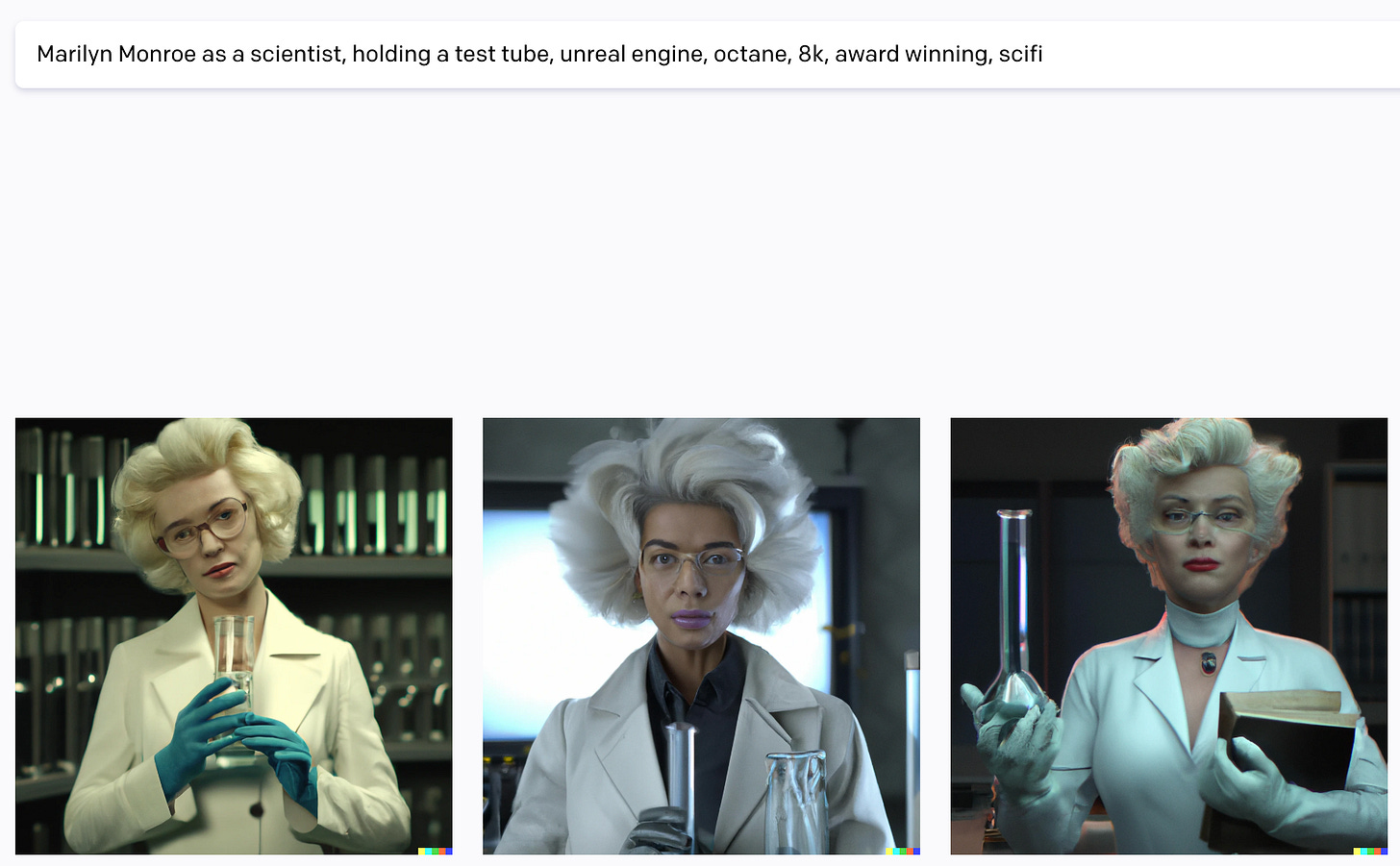

Dalle was trained on millions of images, and many of those included well-known public figures. For example, using Matt Damon in a prompt will lead to more consistent characters because Dalle has been trained on images of him.

This has the unfortunate side effect of requiring you to base your character’s look on a famous, pre-existing public likeness. But it can help your character stay more consistent.

Now let’s move onto the next image generator, Midjourney.

How to create consistent characters using Midjourney

Midjourney can create some fantastic images. Out of the box, they are already more artistic and, in some ways, more consistent than Dalle’s images.

Midjourney does a lot behind the scenes to make the results of your prompt more stylized, more artistic, and a little more consistent.

So Midjourney already starts with more consistent characters, but we can still improve them.

Here are four ways to create more consistent characters in Midjourney:

More prompt details

Run variations to get closer to the character

Image prompt (very powerful)

‘Find’ your character in the crowds

Let’s start with the first…

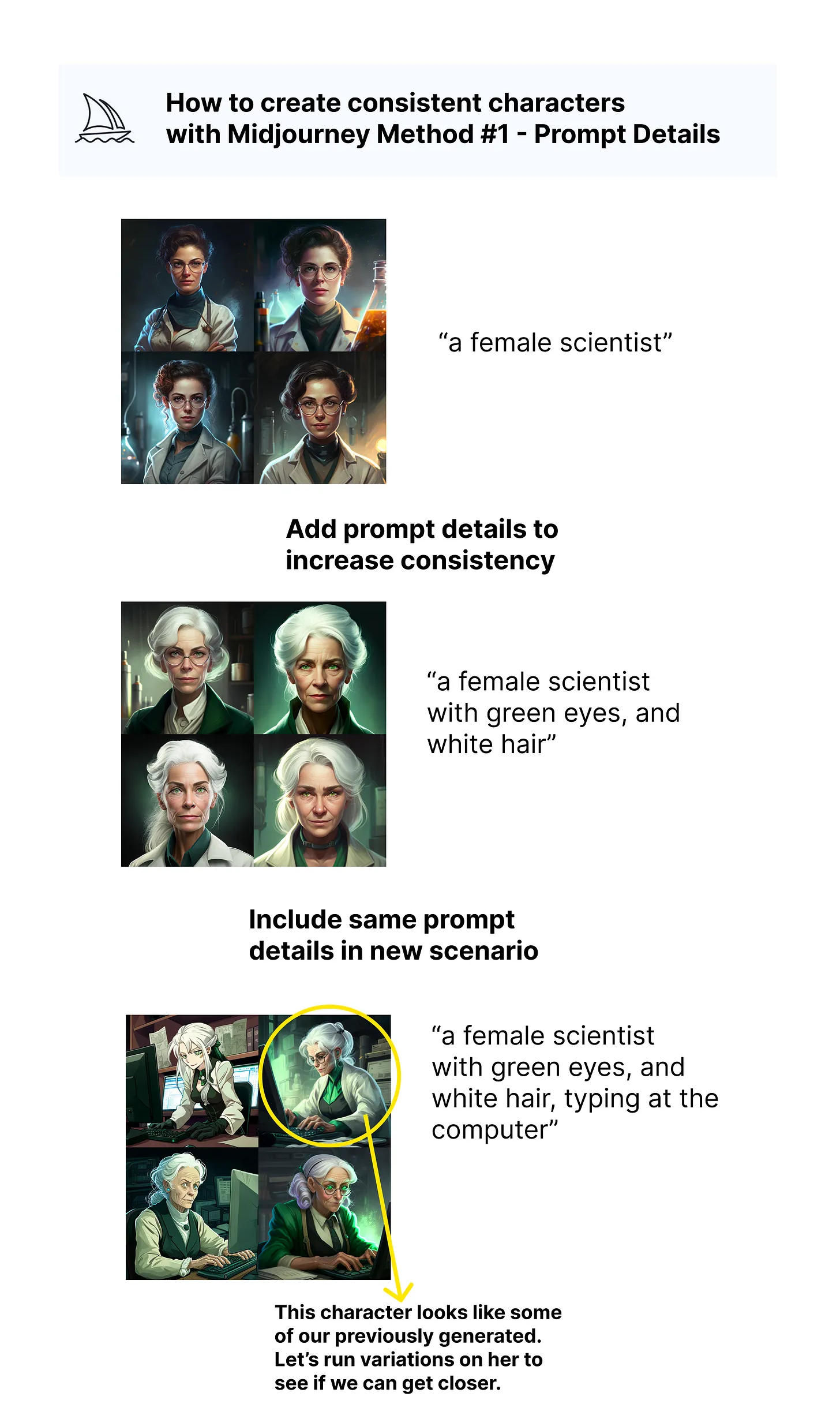

1 - More prompt details

Similar to with Dalle, including more details in our prompt can narrow the range of characters created.

2 - Use variations

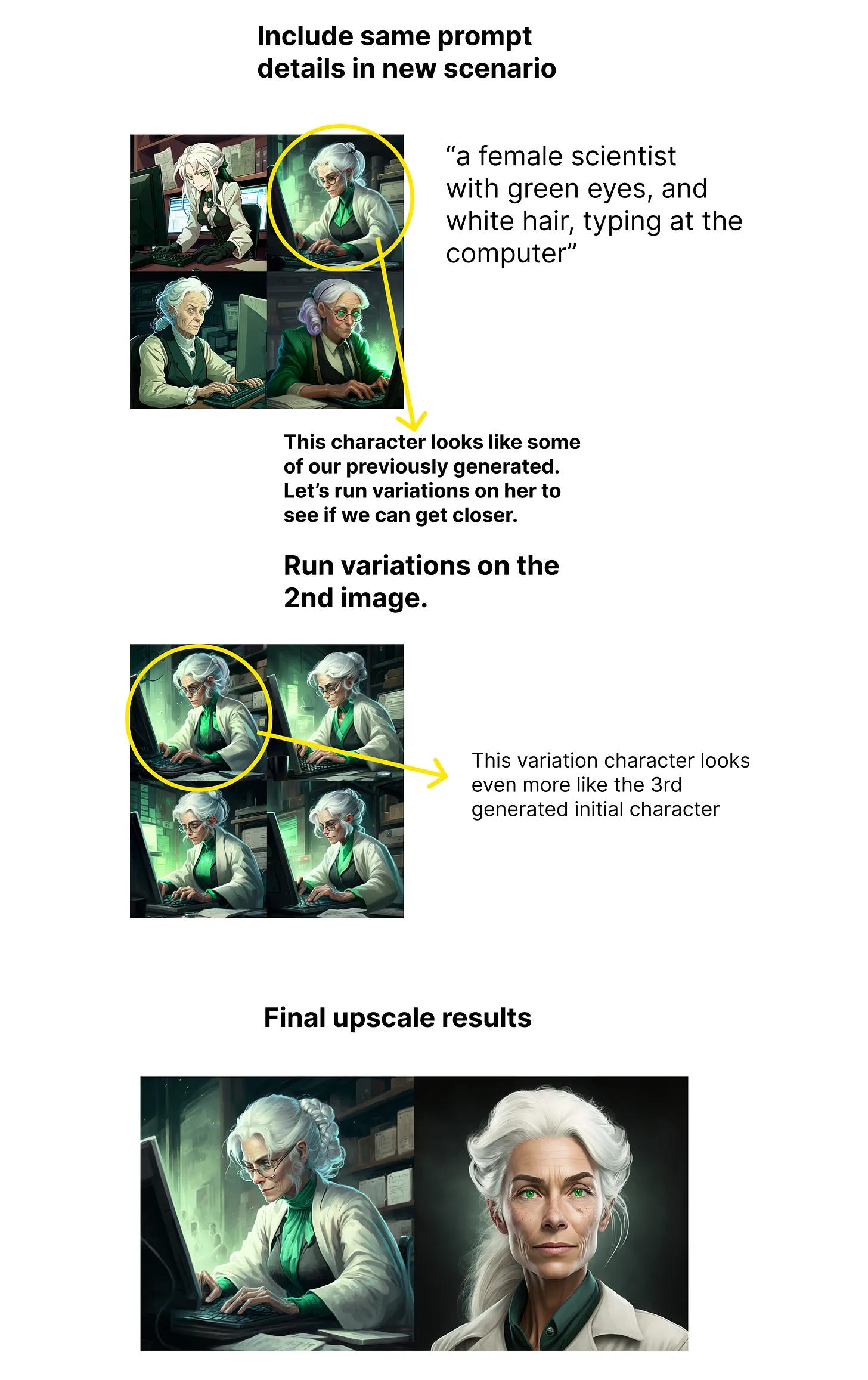

In the image above, you can see that when you reuse the same character description in a new scenario, the character can shift a bit. Luckily, Midjourney allows us to create variations on an image.

These seem to work better than Dalle’s, as the variations maintain the same level of quality.

By using the same character prompts in a new scenario, you can then generate variations until you ‘find’ your character again.

Using more details and variations, we can get a mostly consistent character in different scenarios.

The best part is we can then use these images to get more scenarios using image prompting.

3 - Use image prompts

Image prompts are a super powerful technique in Midjourney. You upload an image to the Discord server, then put the image URL at the start of your prompt.

I covered how to use image prompts in my Midjourney 101 article if you have questions.

By using the image of our character as an input in the prompt, we can get pretty consistent characters in a wide variety of scenarios.
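Since the image URL has to come first, a small helper can assemble the text that goes after `/imagine prompt:`. The sketch below is hypothetical and only builds a string; the optional `--iw` (image weight) parameter controls how strongly the image influences the result, but whether it is supported, and its allowed range, depends on the Midjourney model version. The example URL is a placeholder.

```python
from typing import List, Optional


def image_prompt(image_urls: List[str], text: str, image_weight: Optional[float] = None) -> str:
    """Assemble the text that goes after /imagine prompt:, with image URLs first."""
    if not image_urls:
        raise ValueError("an image prompt needs at least one image URL")
    parts = [*image_urls, text.strip()]
    if image_weight is not None:
        # --iw support and its allowed range depend on the Midjourney model version.
        parts.append(f"--iw {image_weight:g}")
    return " ".join(p for p in parts if p)


# Hypothetical URL: use the link Discord gives you for your uploaded character image.
print(image_prompt(
    ["https://example.com/scientist.png"],
    "female scientist smiling, blond hair, standing in a desert at sunset",
    image_weight=1.5,
))
```

Keeping the same character image URL and changing only the text is what lets the character carry over into new scenarios.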

The last method relies on volume.

4 - Find your character in the crowd

This technique is popular with the #aicinema crowd: you generate tons of images of a time period with a certain look, then look for consistent characters among them.

I haven’t used this myself, but search the hashtag on Twitter to find others using the technique.
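If you want to semi-automate the ‘look for consistent characters’ step, one option (an assumption, not part of the workflow described above) is to compute a feature vector for each generated image with an embedding model of your choice and rank the crowd by cosine similarity to a reference image. The vectors below are toy values.

```python
import math
from typing import Dict, List, Sequence


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity between two feature vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def find_in_crowd(
    reference: Sequence[float],
    crowd: Dict[str, Sequence[float]],
    threshold: float = 0.9,
) -> List[str]:
    """Return image names whose features are close to the reference, best match first."""
    scored = [(name, cosine(reference, features)) for name, features in crowd.items()]
    scored.sort(key=lambda item: item[1], reverse=True)
    return [name for name, score in scored if score >= threshold]


# Toy feature vectors; real ones would come from an embedding model of your choice.
crowd = {
    "img_001": [0.9, 0.1, 0.0],
    "img_002": [0.0, 1.0, 0.2],
    "img_003": [1.0, 0.0, 0.1],
}
print(find_in_crowd([1.0, 0.0, 0.0], crowd))  # → ['img_003', 'img_001']
```

The threshold trades recall for precision: lower it to see more candidates, raise it to keep only near-identical matches.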

How to create consistent characters in Stable Diffusion

Stable Diffusion is open source, meaning we can open the box and train it. This makes it much easier to generate consistent characters because we can train Stable Diffusion on our characters and styles.

There are many ways to do this, and this email is already getting long, so I will cover how to create consistent characters in Stable Diffusion next week!

Update: The How to create consistent characters in Stable Diffusion article is done. Here it is!

In it we cover:

More prompt details

Run variations to get closer to the character

‘Find’ your character in the crowds

Textual Inversion

Dreambooth

Model fine-tuning

Thanks for reading. Let me know in the comments if I missed any techniques. Also, if you used these to create a character, share it in the comments.